V-HOP: Visuo-Haptic 6D Object Pose Tracking

We fuse visual and haptic sensing to achieve accurate real-time in-hand object tracking.

Read our preprint on arXiv

Abstract

Humans naturally integrate vision and haptics for robust object perception during manipulation. The loss of either modality significantly degrades performance. Inspired by this multisensory integration, prior object pose estimation research has attempted to combine visual and haptic/tactile feedback. Although these works demonstrate improvements in controlled environments or synthetic datasets, they often underperform vision-only approaches in real-world settings due to poor generalization across diverse grippers, sensor layouts, or sim-to-real environments. Furthermore, they typically estimate the object pose for each frame independently, resulting in less coherent tracking over sequences in real-world deployments. To address these limitations, we introduce a novel unified haptic representation that effectively handles multiple gripper embodiments. Building on this representation, we introduce a new visuo-haptic transformer-based object pose tracker that seamlessly integrates visual and haptic input. We validate our framework on our dataset and the Feelsight dataset, demonstrating significant performance improvement on challenging sequences. Notably, our method achieves superior generalization and robustness across novel embodiments, objects, and sensor types (both taxel-based and vision-based tactile sensors). In real-world experiments, we demonstrate that our approach outperforms state-of-the-art visual trackers by a large margin. We further show that we can perform precise manipulation tasks by incorporating our real-time object tracking result into motion plans, underscoring the advantages of visuo-haptic perception. Our model and dataset will be made open source upon acceptance of the paper.

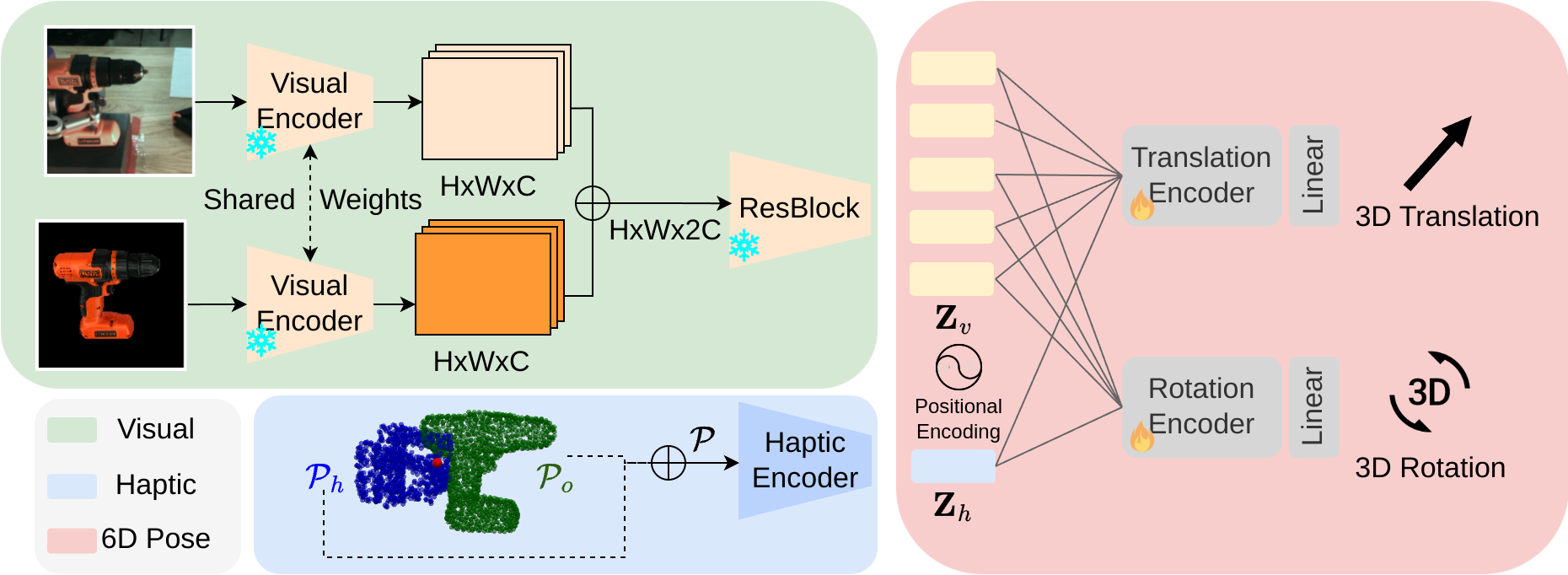

Methodology

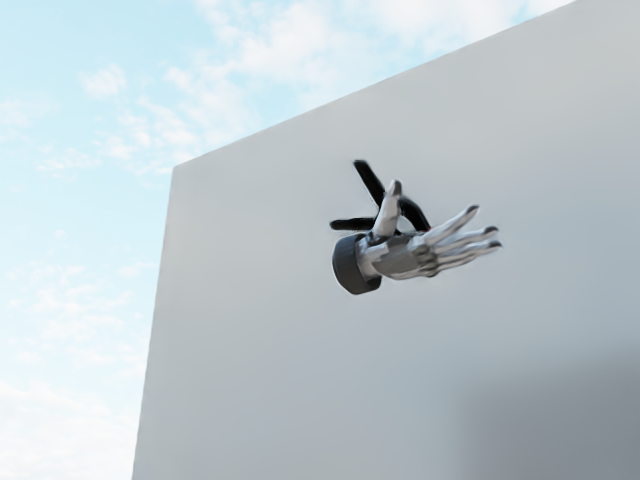

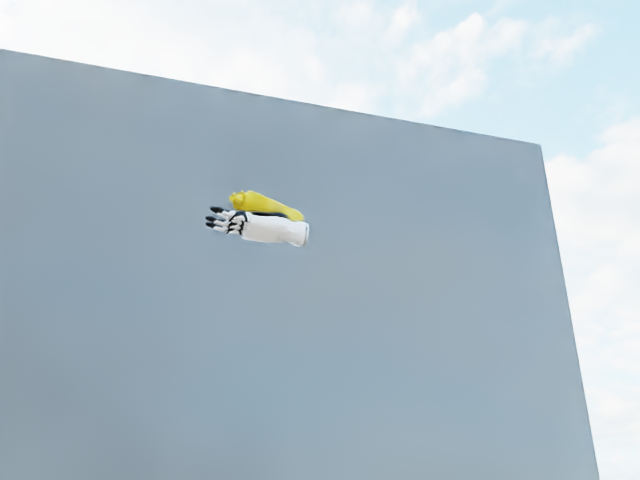

Dataset Visualization

Experiment Video

Citing V-HOP

@misc{li2025vhop,
  title={V-HOP: Visuo-Haptic 6D Object Pose Tracking},
  author={Hongyu Li and Mingxi Jia and Tuluhan Akbulut and Yu Xiang and George Konidaris and Srinath Sridhar},
  year={2025},
  eprint={2502.17434},
  archivePrefix={arXiv},
  primaryClass={cs.RO},
  url={https://arxiv.org/abs/2502.17434},
}

Acknowledgements

This work is supported by the National Science Foundation (NSF) under CAREER grant #2143576, grant #2346528, and ONR Grant #N00014-22-1-259. We thank Ying Wang, Tao Lu, Zekun Li, and Xiaoyan Cong for their valuable discussions.

Code coming soon!

Related Works

2025

- RSS

V-HOP: Visuo-Haptic 6D Object Pose Tracking

In Robotics: Science and Systems (RSS), 2025

Oral presentation at New England Manipulation Symposium (NEMS).

Presented at ICRA 2025 ViTac workshop.

2023

- RA-L / ICRA

ViHOPE: Visuotactile In-Hand Object 6D Pose Estimation with Shape Completion

IEEE Robotics and Automation Letters, 2023

Unfortunately, we are unable to publish the code and the dataset per the company policy.

Presented at ICRA 2024 in Yokohama, Japan.

Presented at NeurIPS 2023 Workshop on Touch Processing in New Orleans, LA.