Hongyu Li (李鸿宇)

Welcome! I am a second-year Ph.D. student in Computer Science at Brown University. I work with Prof. Srinath Sridhar at the Interactive 3D Vision & Learning Lab. I’m also working as an Applied Scientist intern at Amazon Robotics, advised by Prof. Taskin Padir.

My research interests lie at the intersection of computer vision, machine learning, and robotics, particularly robot perception (vision and touch) and planning. Perception and planning play a crucial role across robotics domains, and I am currently focused on developing deep learning models for environment and object interaction.

Before joining Brown University, I worked with Prof. Huaizu Jiang and Prof. Taskin Padir at Northeastern University. I also did two research internships at Honda Research Institute, focusing on visuotactile perception under the guidance of Dr. Nawid Jamali and Dr. Soshi Iba.

I serve as a reviewer for conferences such as ICRA, IROS, CVPR, ECCV, and CHI, and for journals including RA-L, Neurocomputing, and TCSVT, among others.

News

| Jul 1, 2024 | Four papers (including one RA-L) are accepted at IROS 2024, including one oral presentation. |

|---|---|

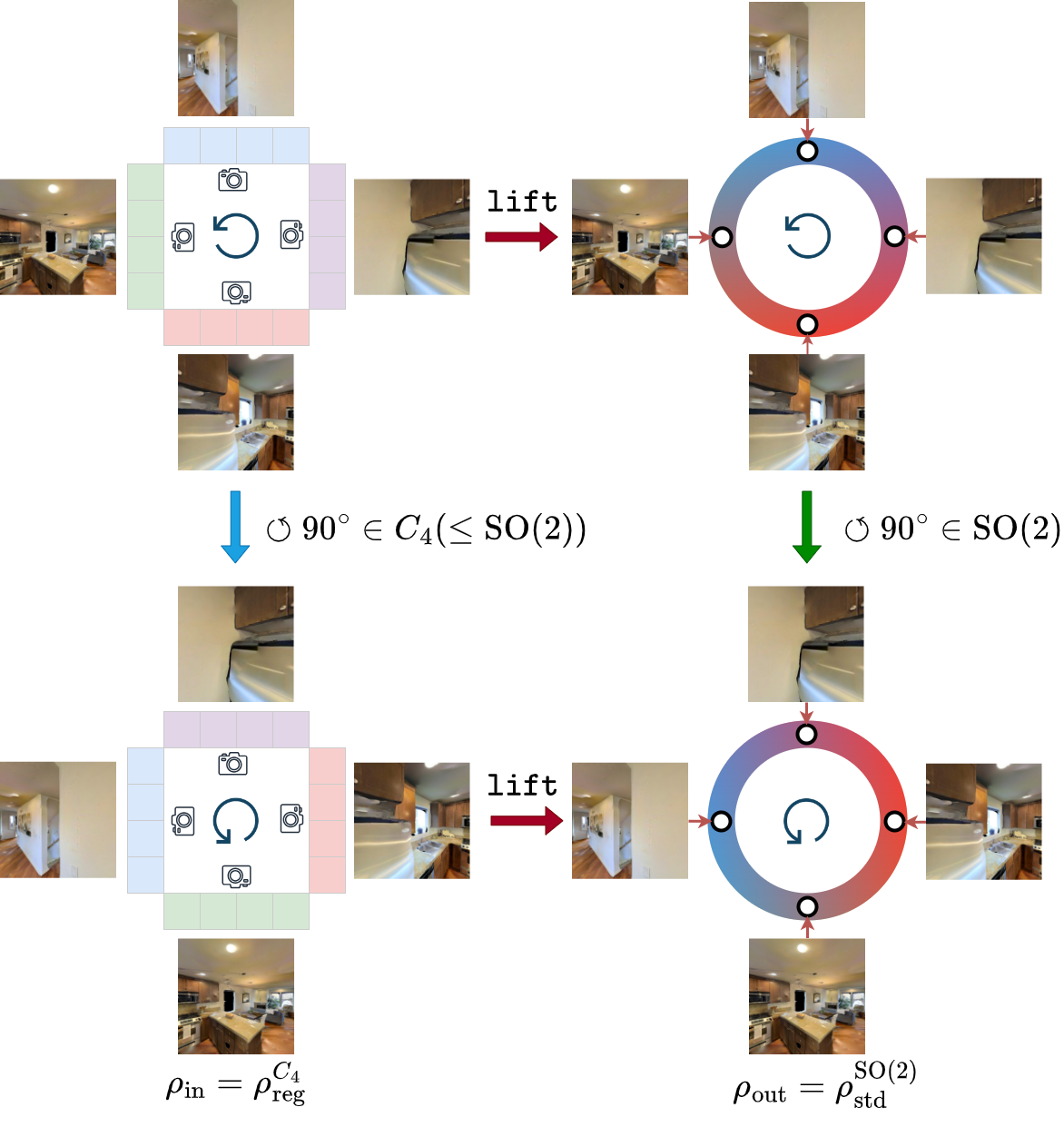

| Jan 20, 2024 | Our work E(2)-Equivariant Graph Planning for Navigation is accepted to RA-L. See you in Abu Dhabi |

| Aug 25, 2023 | Our work ViHOPE is accepted to RA-L. See you in Yokohama |

| May 15, 2023 | I receive a $1,300 RAS travel grant for ICRA 2023. |

| Apr 17, 2023 | I will present our work in progress, StereoNavNet: Learning to Navigate using Stereo Camera with Auxiliary Occupancy Voxels, at CVPR 2023 3D Vision and Robotics in Vancouver |

Selected Publications

Symbols * and † denote equal contribution and advising, respectively.

2024

- IROS

HyperTaxel: Hyper-Resolution for Taxel-Based Tactile Signal Through Contrastive Learning
In IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2024

2023

- RA-L / ICRA

ViHOPE: Visuotactile In-Hand Object 6D Pose Estimation with Shape Completion
IEEE Robotics and Automation Letters, 2023
Presented at ICRA 2024 in Yokohama, Japan.

Presented at NeurIPS 2023 Workshop on Touch Processing in New Orleans, LA.